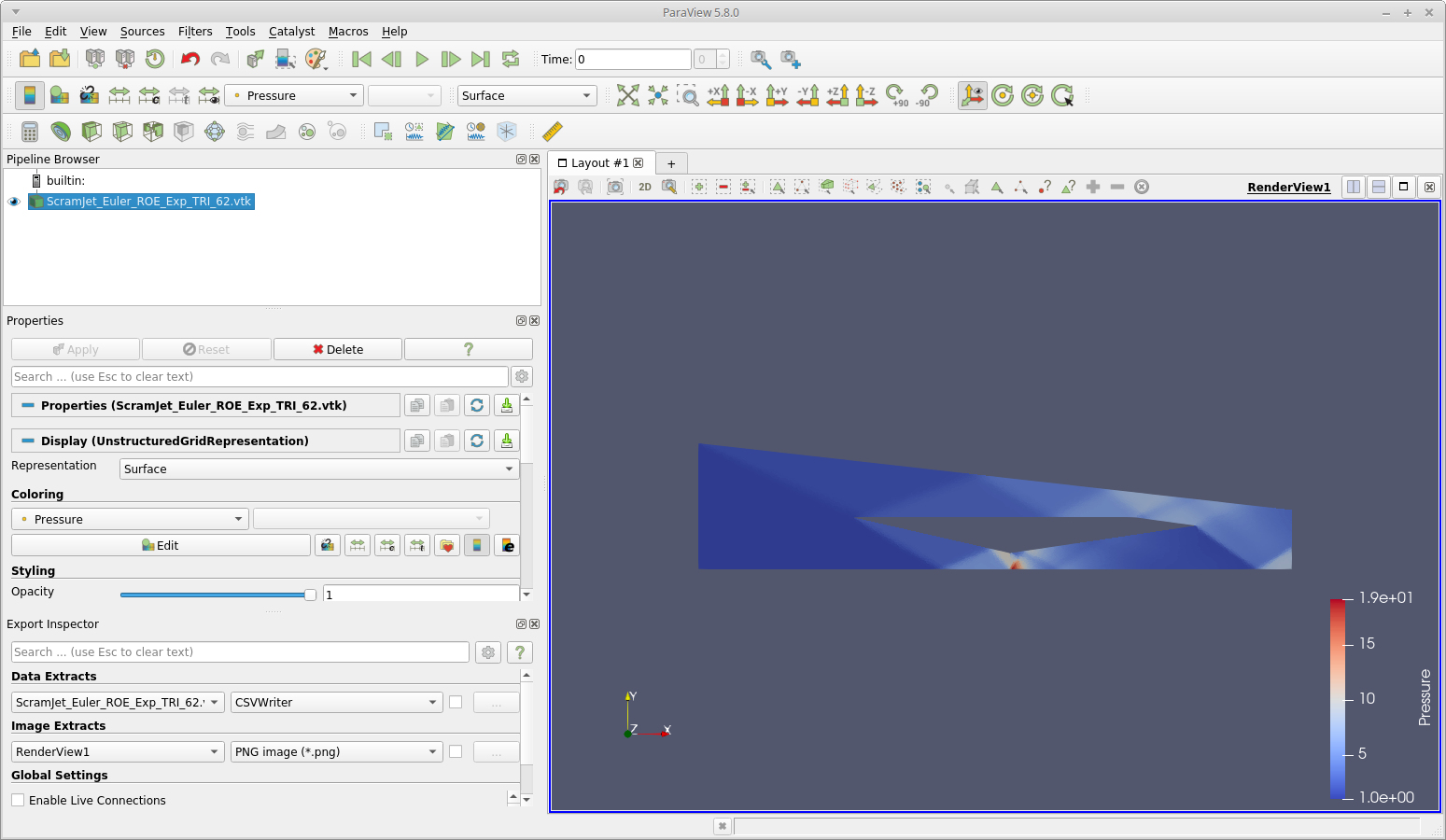

Paraview connectivity software

Virtual Reality (VR)-based construction design review applications have shown potential to enhance user performance in many research projects and experiments. Currently, visualizing occluded objects in VR is a challenge, yet this function is indispensable for construction design review and coordination. This paper proposes an occlusion detection framework that semi-automatically identifies occluded objects in 3D construction models. The framework determines the visibility status of an object by converting the object to a point cloud and comparing that point cloud with the result of a virtual laser scan of the original model. It exports models that are interoperable with VR development software, so that visualization effects can easily be applied to occluded objects.

Recent advances in robotics and computer vision have led to the availability of integrated depth-sensing and motion-tracking mobile devices. These devices enable the construction of detailed 3D models of an environment within which motion can be tracked without reliance on GPS. The potential of such techniques has yet to be explored in the field of animal biotelemetry. This report trials an animal-attached structured-light depth-sensing and visual–inertial odometry motion-tracking device in an outdoor environment (coniferous forest), using the domestic dog (Canis familiaris) as a compliant test species. A 3D model of the forest environment surrounding the subject animal was successfully constructed using point clouds. The forest floor was labelled using a progressive morphological filter. Tree trunks were modelled as cylinders and identified by random sample consensus (RANSAC). The predicted and actual presence of trees matched closely, with an object-level accuracy of 93.3%. Individual points were labelled as belonging to tree trunks with a precision, recall, and Fβ score of 1.00, 0.88, and 0.93, respectively. In addition, ground-truth tree trunk radius measurements were not significantly different from values derived from the RANSAC model coefficients. A first-person view of the 3D model was created, illustrating the coupling of animal movement and environment reconstruction. Using data collected from an animal-borne device, the present study demonstrates how terrain and objects (in this case, tree trunks) surrounding a subject can be identified by model segmentation. The device pose (position and orientation) also enabled recreation of the animal's movement path within the 3D model. Although some challenges, such as device form factor, validation in a wider range of environments, and interference from direct sunlight, remain before routine field deployment can take place, animal-borne depth sensing and visual–inertial odometry have great potential as visual biologging techniques to provide new insights on how terrestrial animals interact with their environments.
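
The text states only that trunks were modelled as cylinders and identified by random sample consensus; it does not give the fitting details. As a simplified illustration, the sketch below applies RANSAC to a single horizontal slice of trunk points and fits a circle rather than a full 3D cylinder (a deliberate swap-in to keep the example short). The function names, the inlier threshold, and the synthetic slice are hypothetical; the fitted radius plays the role of the "model coefficient-derived" trunk radius.

```python
import math
import random


def circle_from_three_points(p1, p2, p3):
    """Circle (cx, cy, r) through three 2D points, or None if they are collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None  # collinear points define no circle
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def ransac_circle(points, threshold, iterations=500, seed=0):
    """Return the circle with the most inliers and a per-point inlier mask.

    Simplification (hypothetical): a 2D circle per horizontal slice instead of
    the 3D cylinder model described in the text.
    """
    rng = random.Random(seed)
    best_model, best_mask, best_count = None, [False] * len(points), -1
    for _ in range(iterations):
        # Minimal sample: three points define a candidate circle.
        model = circle_from_three_points(*rng.sample(points, 3))
        if model is None:
            continue
        cx, cy, r = model
        # A point is an inlier if its distance to the circle is within the threshold.
        mask = [abs(math.hypot(x - cx, y - cy) - r) <= threshold for x, y in points]
        count = sum(mask)
        if count > best_count:
            best_model, best_mask, best_count = model, mask, count
    return best_model, best_mask


# Hypothetical slice: 40 points on a ~0.15 m radius trunk centred at (2.0, 3.0),
# with a small radial wiggle, plus 10 clutter points far from the trunk.
ring = [
    (2.0 + (0.15 + 0.001 * math.sin(3 * k)) * math.cos(2 * math.pi * k / 40),
     3.0 + (0.15 + 0.001 * math.sin(3 * k)) * math.sin(2 * math.pi * k / 40))
    for k in range(40)
]
clutter = [(0.3 * i, 0.0) for i in range(10)]
points = ring + clutter

(cx, cy, r), mask = ransac_circle(points, threshold=0.01)
print(f"centre=({cx:.3f}, {cy:.3f}) radius={r:.3f} m inliers={sum(mask)}")
```

Points flagged in the mask would be labelled as trunk points; everything else is left for other segmentation steps.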

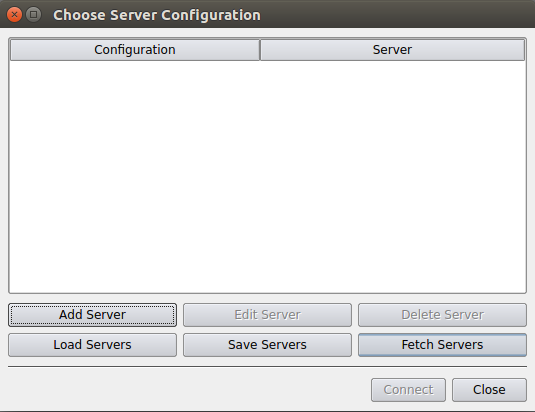
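
The point-level precision, recall, and Fβ values quoted for the forest data follow the standard definitions from true-positive, false-positive, and false-negative counts. The text does not state β, so the sketch below assumes β = 1, and the counts are invented for illustration rather than taken from the study. Under that assumption, a recall of 0.875 (which rounds to the reported 0.88) with perfect precision gives F1 ≈ 0.93, so the three reported figures are mutually consistent.

```python
def precision_recall_fbeta(tp, fp, fn, beta=1.0):
    """Point-level precision, recall and F-beta from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta * beta
    denom = b2 * precision + recall
    # F-beta attaches beta times as much importance to recall as to precision; beta = 1 gives F1.
    fbeta = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, fbeta


# Hypothetical counts (not from the study): 875 trunk points found, 0 false alarms, 125 missed.
p, r, f = precision_recall_fbeta(tp=875, fp=0, fn=125)
print(f"{p:.2f} {r:.2f} {f:.2f}")  # prints 1.00 0.88 0.93
```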

Paraview connectivity drivers

Animal-attached sensors are increasingly used to provide insights on behaviour and physiology. However, such tags usually lack information on the structure of the surrounding environment from the perspective of a study animal, and thus may be unable to identify potentially important drivers of behaviour.

Paraview connectivity series

This study aimed to develop an automatic and accurate inspection system for LiDAR alignment for application in the automobile industry. The proposed inspection system is a one-shot, static method that uses only a single cubic target at a close range of less than 2 m. The LiDAR pose relative to the target frame, and consequently relative to the vehicle body, is estimated from the 3D positions of the vertices of the cubic target. Instead of extracting the vertices directly from the point cloud data, the method estimates them accurately through a series of processes that find the best-fit orthogonal planes and their intersections. The proposed method can be applied efficiently to low-resolution LiDAR and provides higher accuracy and precision than a similar static inspection method that uses a planar target. Experimental evaluation of the proposed method with a low-resolution LiDAR (Velodyne VLP-16) yielded measurement errors of less than 0.04° for rotation alignment and less than 1 mm for translation alignment. Therefore, the designed inspection system is practical for in-factory applications, because its one-shot static methodology uses only a simple cubic target and detects the alignment error with high accuracy.
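
A rough sketch of the vertex-estimation step, assuming NumPy: each of the three faces meeting at a cube corner is fitted with a least-squares plane (via SVD), and the vertex is the solution of the 3×3 linear system formed by the three plane equations. Unlike the method described above, this sketch fits the planes independently and does not constrain them to be mutually orthogonal; the function names, noise level, and cube geometry are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane through an (N, 3) array; returns (unit normal n, offset d) with n . x = d."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, float(normal @ centroid)


def intersect_three_planes(planes):
    """Solve n_i . x = d_i (i = 1..3) for the common point x; planes is a list of (n, d)."""
    normals = np.array([n for n, _ in planes])
    offsets = np.array([d for _, d in planes])
    # Raises LinAlgError if the planes do not meet in a single point (singular system).
    return np.linalg.solve(normals, offsets)


# Hypothetical target: the corner of a cube with 0.3 m edges at (1.0, 2.0, 0.5),
# with 2 mm Gaussian noise along each face normal.
rng = np.random.default_rng(0)
corner = np.array([1.0, 2.0, 0.5])
edge = 0.3


def sample_face(axis, n=200):
    """Noisy points on the cube face through `corner` that is perpendicular to `axis`."""
    pts = corner + rng.uniform(0.0, edge, size=(n, 3))
    pts[:, axis] = corner[axis] + rng.normal(0.0, 0.002, size=n)
    return pts


planes = [fit_plane(sample_face(axis)) for axis in range(3)]
vertex = intersect_three_planes(planes)
print("estimated vertex:", np.round(vertex, 4))
```

Because each vertex is computed from hundreds of face points rather than picked from the sparse scan, a low-resolution sensor can still locate it precisely. Repeating this for several vertices would give target-frame/LiDAR-frame correspondences from which a rigid pose could be solved (for example with an SVD-based least-squares alignment); that step is not shown here.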
